BLOBs are cool. Optimized such that you can store files basically in the database, with the power of SQL AND no performance hit. That sounds like a pretty good deal.

I get asked questions a lot about accessing BLOBs once they’re in the database.

And that’s led to quite a few posts here on the subject:

- Viewing BLOBs as pictures

- Viewing BLOBs in External Editors (PDFs, spreadsheets, etc.)

- Including BLOBs in your PDF reports

But today’s question comes from Amy:

Can I export multiple blobs using SQL Developer?

So, short answer is: YES.

The little bit longer answer is: Yes, but be sure to use the SQL*Loader format preference.

And the longest answer is…

Getting your BLOBs out

There are a few ways to make this happen, but they all revolve around using the ‘Loader’ formatter available in the ‘Export’ engine that SQL Developer uses to export data.

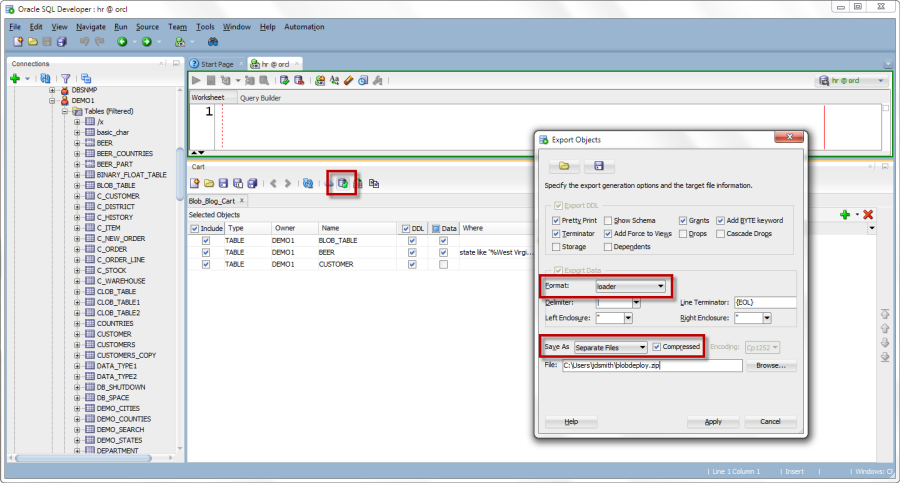

I could right-click on a table, I could use the Tools > Database Export, or I could use the Cart.

I don’t get to talk about the Cart enough, so I’m going to show you how to use the Cart, again.

Open the Cart

This is easy, just access it from the View menu.

Then drag your objects containing the BLOBs to the Cart

Click the ‘Export Cart’ button

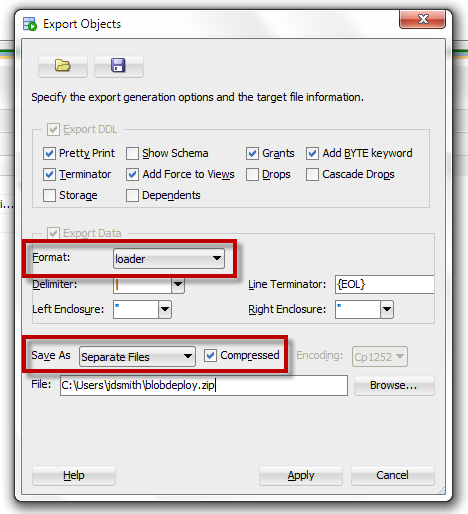

I recommend these specific options:

- Loader – that’s for SQL*Loader

- Separate Files – write out a file for each table (and for the blobs, a file for each of those too)

- Compressed – zip that stuff up

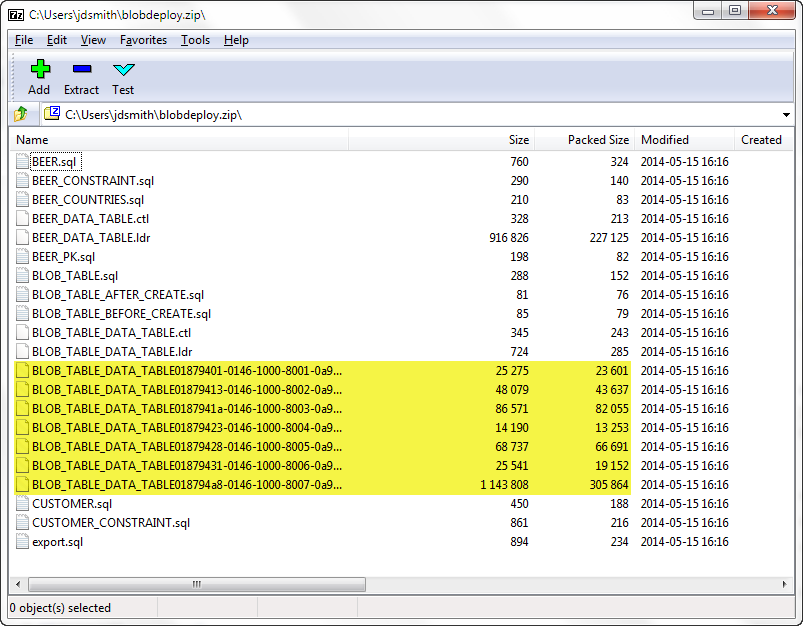

So after this finishes, you’ll have something like this:

There’s a ‘export.sql’ script. That will go and create all of your tables for you – assuming you checked the option to export the DDL as well. And then you would need to launch SQL*Loader to load up the tables as desired.

And if you’re curious about how this mechanism actually worksthis works, check out the DOCS.

36 Comments

Thanks Jeff, very useful!

Hi – I was able to follow this to export a BLOB successfully to produce a file on the filesystem that has the blob value/content (it is a Java keystore file), but what we are looking to do is to REPLACE the BLOB value in the table.

When I exported, I checked the “DDL” checkbox as you suggested and also the “compressed”, so I get the ZIP with a bunch of .SQL files, including one of which is the binary data that was in the BLOB.

If we replace that one binary data file with data from a different binary data file on the filesystem, can we run one of the generated .sql files to re-import the replacement BLOB value?

Thanks – this is SOOOO close to what we have been looking to do!

Jim

no, you can’t run an INSERT to put a BLOB into a row, you’ll need to setup SQL*Loader, or write some application code

Hi Jeff,

Thanks for showing this method.

I managed to extract all the files from the Oracle BLOB successfully.

I exported the files uncompressed.

Then, rename all the file extensions from ldr to whatever format they were loaded (pdf,txt,doc,png,jpg etc).

I change it based on their file size.

For zip file loaded, I can unzipped it and all the files are there too.

Then just double-click to open it.

Cheers!!

Awesome! Thanks for sharing your success!!

Hi Jeff, thanks for your post on the cart feature. I need to export data from queries and this is not possible. Later I discovered the SQLcl that maybe can do what I need. Do you know if there is something (a library, a script or something similar) to emulate the export in loader format with blob in separate files like the SQL Developer do ?

I’m trying to write something to do that (the alternative is to write views for every table with blobs) but I think that if exists something is better to use it and maybe improve it instead reinventing the wheel.

I apologize for my english, thx in advance.

Willing to have a go at some js?

@krisrice wrote this

1 script2 // issue the sql

3 var binds = {}

4 var ret = util.executeReturnList('select id,name,content from images',binds);

5

6 // loop the results

7 for (i = 0; i < ret.length; i++) { 8 // debug is nice 9 ctx.write( ret[i].ID + "\t" + ret[i].NAME+ "\n"); 10 // get the blob stream 11 12 var blobStream = ret[i].CONTENT.getBinaryStream(1); 13 14 // get the path/file handle to write to 15 var path = java.nio.file.FileSystems.getDefault().getPath(ret[i].NAME); 16 17 // dump the file stream to the file 18 java.nio.file.Files.copy(blobStream,path); 19 } 20 / 21 !ls

See the 'script' command in SQLcl, and you'll be off and running.

Hi,

I followed your instructions and I was able to create the compressed zip file with all tables and inserts, but no blobs were included. Actually, in the inserts, the files of the big blobs that should be created, are mentioned, but there isn’t anything on the zip.

Any idea?

Thanks!

What version of the tool are you using? You see any errors in the Log panel?

Hi Jeff,

Great post! SQL Dev tool is indeed loaded with “hidden features”. Been using SQL Dev for 5 yrs+ now and it’s the first time I’m seeing Cart in action.

Can you help me with the following:

– where can I read some more about the Cart functionality of SQL Developer?

– any chance I can export the blobs with a custom file names?

I followed the instructions and generated some files, having generic names and .ldr extension.

Let’s say I have a table with the following structure and content:

ID | File_Name | Content(Blob)

1 | sample1.jpg |

2 | sample2.pdf |

Can the export keep the file extensions?

Regards,

Cristian

I don’t know how to control the file name, at least not w/o writing a shell script to change them after the fact.

Thanks. This is the only solution that is portable in terms of extraction and distribution . It gives the flexibility of delivering components to be deployed in higher environments.

Wow can’t do nothing with these output names, useless

You could always write a sqlcl script, we have examples on GitHub, ain’t nothing wrong with that I hope

Can you provide one of your example SqlCl scripts to handle renaming the output files?

Hi Jeff,

it seems that this is also exporting virtual columns. Is there a way to prevent this in 4.1.5?

Thank you & Best regards

Salek

Write a query and export that, or create a view and export the view.

Ok, I really could have thought of the VIEW-solution myself.

Thank you,

Salek

I have exported the contents to deploy.zip . How do I import the data using the exported file. Do u have any document for that.

are you familiar with SQL*Loader?

Yes. Without the compress option , I see multiple files with control file. Will use the same control file and will use sql loader to load it. Now it is clear . Thanks for the prompt response.

thanks… useful info… i was able to export using cart and import blob data with above steps using sqlldr… only thing i did not do is check compressed box as whenever i checked compressed, blob data file did not generate in the zip file. So i left compressed option unchecked & blob data file generated.

SQL developer version: Version 4.0.2.15 Build 15.21

Hi Jeff,

I have something I’ve been struggling with for a bit of time. I have a very large table (172GB ) in Oracle (version 11.2) that I need to export and load to another database. I’ve been using the export utility of SQL Developer (version 4.1.1) with Loader as the format. Next I ran a compress and got the data down to 152Gb. The utility runs fine, however, since the export is so large, I would like to be able to export into multiple directories. This way I can zip each directory and upload to my AWS S3 bucket in say 5 or 10GB chunks.

I’m hoping to be able to continue using SQL Developer and loader as the format as my scripts are running perfectly with .ldr and I’m unloading BLOB data.

Is there a way to perform this?

Much appreciation in advance,

Jennifer

HOW EXPORT AND IMPORT BLOB COLUMN DATA OVER TWO TABLES AMONG TWO DIFFERENT SERVERS BY USING ORACLE SQL DEVELOPER ..??? IS IT POSSIBLE?? AND HOW??

It would be great to be able to export the blobs with useful names (using the keys value from the table) like Toad does. If this was possible we could finally use Toad for extracting all data and giving to customers as needed.

Why can’t you use SQL Developer today?

Hi there, this looks like something we can use, I was checking out the docs (above link) but got a 404.

http://docs.oracle.com/cd/E11882_01/server.112/e10701/ldr_loading.htm#SUTIL1268

Can you please let me know where the doc is.

Thanks

So ALL of the Oracle Docs links out there on blogs, no longer work as our doc site changed. Oh well.

Try this one.

Thanks for the fast reply and fixing the link! I have another question. I’m using sql developer across a vpn, to connect to my 10g aix server (exporting) and my 11g rhel server (importing). So I am assuming I need to have sql developer run locally in an x-session so that I can get my deploy file containing the lobs created locally on the AIX server, ftp over to the RHEL then run sqlloader plus the .sql generated in the deploy.zip to load the blobs. Is that right?

Thanks Cathy

SQL*Loader is client-server – you can serve up the data from anywhere to any database. It’ll just be a LOT faster if you stage it up on the local box.

Hi Jeff,

I recently found out about this possibilty by playing around with the export settings.

But: Do I get it right that the exported BLOBs always get a generated name?

In our tables we usually store the original file names. As these are exported as well in the loader file I can quite easily build a Windows PowerShell script to rename all the files after the export, but I wondered whether there is a better way of achieving this solely with SQL Developer.

(For those who want to know the PowerShell command:

Rename-Item “.ldr” “”)

Oh dear, I used angle brackets – is there a way to correct that?

I don’t know of a way…your solution, post scripty stuff, is probably the way I’d go.

Thanks for the quick reply!

If you tell me that I haven’t overlooked some obvious shortcut, then I can live with it 🙂

Good night!

Sabine

This Power Shell script changes the file extension to the one you want.

(You should be in that specific directory for this to work. Otherwise, you’ll have to specify the directory path as well)

Dir *.ldr | rename-item -newname { $_.name -replace “.ldr”,”.gz” }

I tried the above but I didn’t get a separate file for each blob – just one file for each row. Based on your description I was hoping to get one file per row plus an extra file per blob in each row. Did I misunderstand what this is supposed to provide?