I don’t have SQLcl yet, DOWNLOAD it!

Question from the ‘comments’ today:

I would like to have a parameter for dataload WITHOUT column names having to be in the 1st line.

Simply load the columns in the order they are in the txt/csv file.

Often delivered or generated txt files have no header information at all and you still know how to handle them (you know the target table AND the source file structures and that they must match.)

No joke, I really have such use cases and have (yet) to “construct” the first line (column names) by concat a “default” file and the data file. ugly…

a customer…

When I get these questions, I just love being able to say, “we can in fact to just that!”

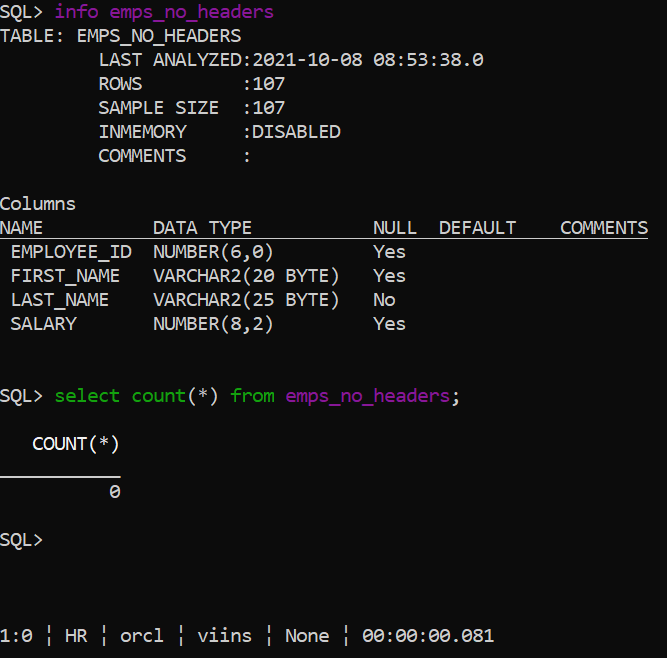

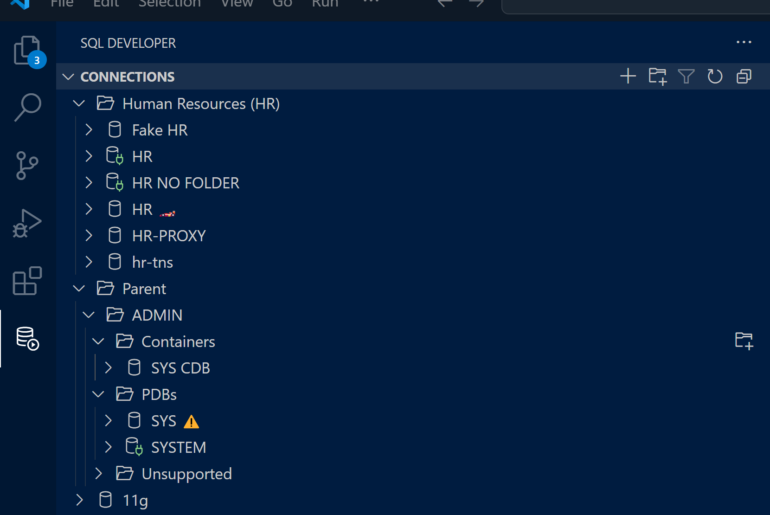

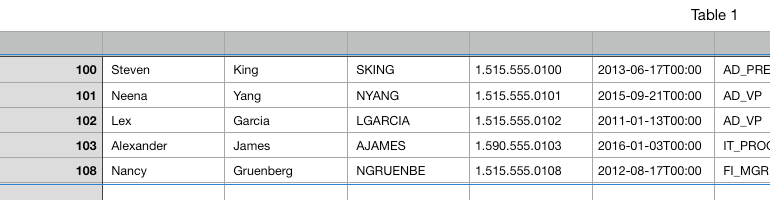

Our TABLE

If we are going to load data, we’re going to need a table. Let’s build a quick-n-dirty copy of HR.EMPLOYEES.

CREATE TABLE emps_no_headers2 AS SELECT employee_id, first_name, last_name, salary FROM employees WHERE 1 = 2;

Our new table looks like this –

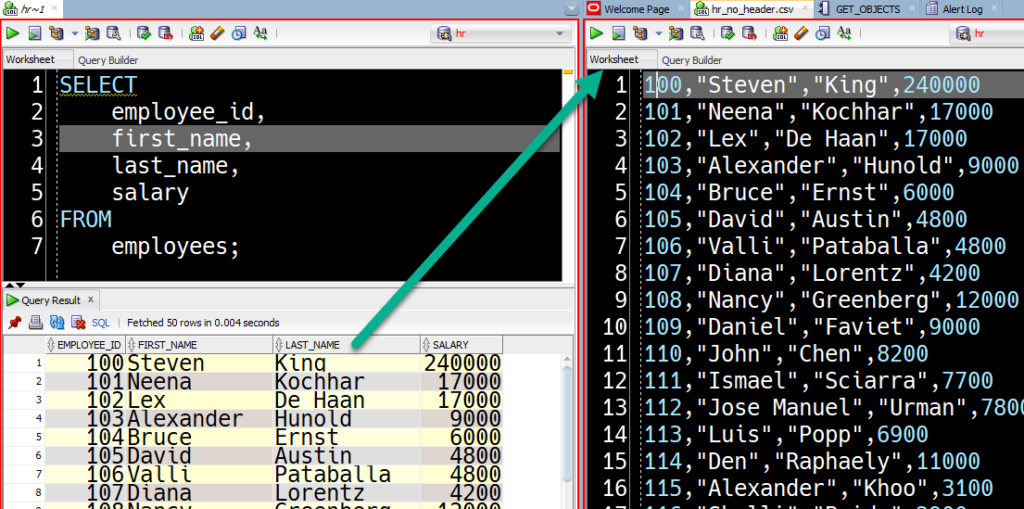

To load CSV, we’re going to need CSV. Let’s generate the CSV from our existing EMPLOYEES table.

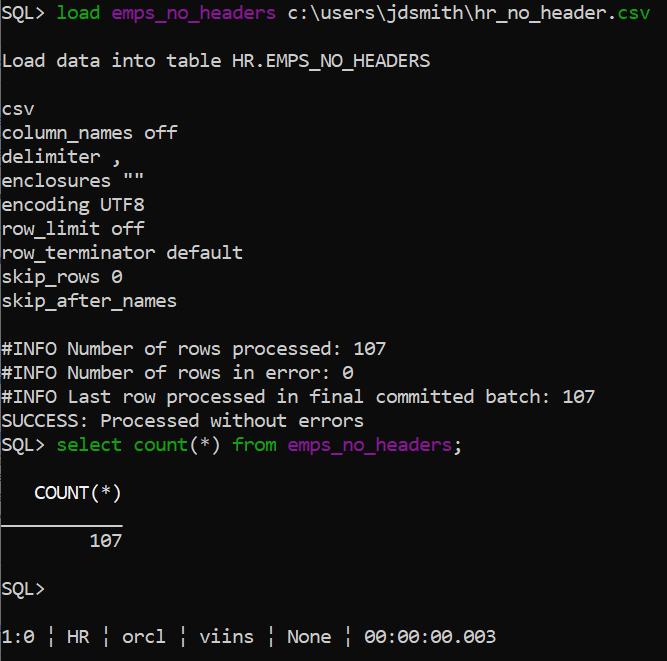

Now the default behavior when using our command to load delimited data is to treat the first line of the incoming data as the list of COLUMN headers for the table, and use that to map which items in the data being streamed goes to which column in the table being populated by SQLcl.

The command is called LOAD.

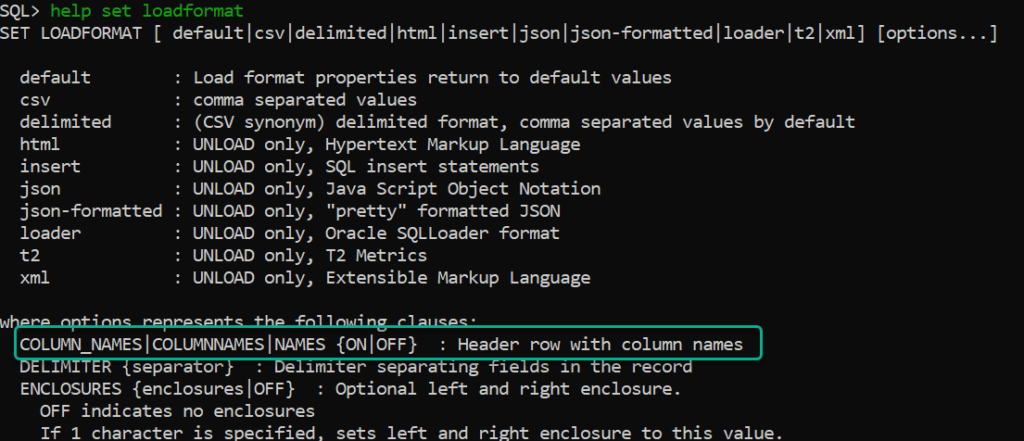

LOAD has two sets of options:

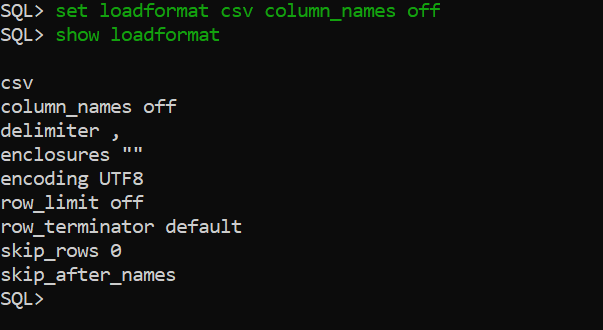

- set loadformat – how the data being imported will be processed

- set load – what and how the load operation will actually take place

We want to tell SQLcl to NOT expect column headers in our CSV, so we’re going to use ‘set loadformat’.

Your Load Options

Hi friends in Europe! I know what you are thinking. You can change the delimiter(;) !

Want some help? Just ask for it…

Loading the table

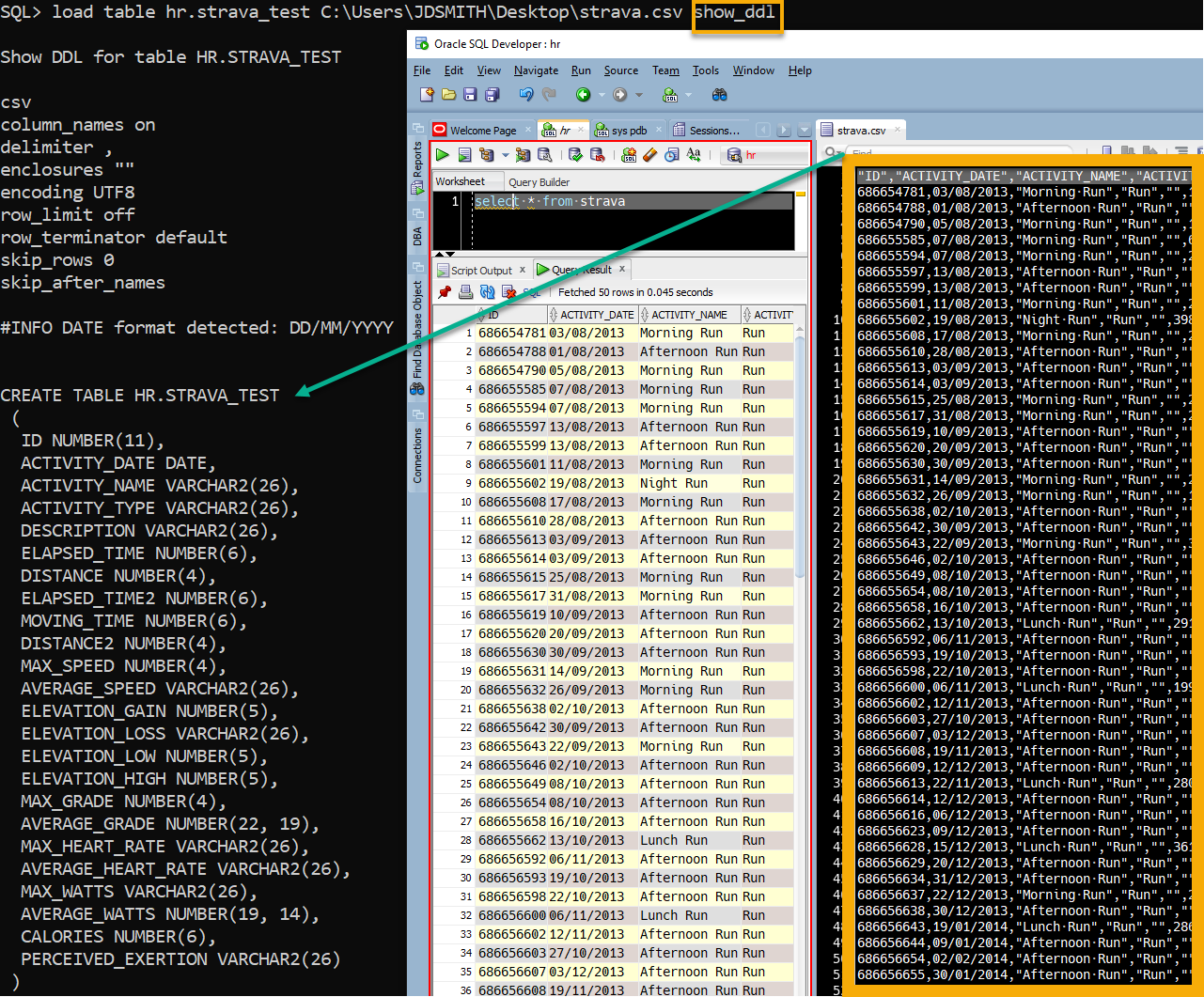

Let’s do a trick! Let’s take a CSV and get DDL for it.

Maybe you don’t have a table yet, you JUST have a CSV file. And THIS CSV file does have column headers. Let’s see what SQLcl 21.3 can do with that.

So I have a CSV. And I WANT a table from that. I can use the GUI of course. But we’re DEVS, no mouse, no mouse!

We’ll scan the data, look for max column widths, date formats, etc, and rename columns that aren’t legal/valid for an Oracle schema. That ‘show_ddl’ bit is new for 21.3, and says, just show me what you WOULD do, without actually doing it.

4 Comments

Thanks Jeff for the nice Tip.

Played with this feature on my local 21c XE instance – here is what i get.

Can you help us to understand why we got only 13 rows loaded instead of 14 rows from the file ?

demo@XEPDB1> $ type c:\users\admin\emp_csv_without_header.txt

7698,”BLAKE”,”MANAGER”,7839,01-MAY-1981,2850,,30

7566,”JONES”,”MANAGER”,7839,02-APR-1981,2975,,20

7788,”SCOTT”,”ANALYST”,7566,19-APR-1987,3000,,20

7902,”FORD”,”ANALYST”,7566,03-DEC-1981,3000,,20

7369,”SMITH”,”CLERK”,7902,17-DEC-1980,800,,20

7499,”ALLEN”,”SALESMAN”,7698,20-FEB-1981,1600,300,30

7521,”WARD”,”SALESMAN”,7698,22-FEB-1981,1250,500,30

7654,”MARTIN”,”SALESMAN”,7698,28-SEP-1981,1250,1400,30

7844,”TURNER”,”SALESMAN”,7698,08-SEP-1981,1500,0,30

7876,”ADAMS”,”CLERK”,7788,23-MAY-1987,1100,,20

7900,”JAMES”,”CLERK”,7698,03-DEC-1981,950,,30

7839,”KING”,”PRESIDENT”,,17-NOV-1981,5000,,10

7782,”CLARK”,”MANAGER”,7839,09-JUN-1981,2450,,10

7934,”MILLER”,”CLERK”,7782,23-JAN-1982,1300,,10

demo@XEPDB1> truncate table emp2;

Table EMP2 truncated.

demo@XEPDB1> load emp2 c:\users\admin\emp_csv_without_header.txt

Load data into table DEMO.EMP2

csv

column_names on

delimiter ,

enclosures “”

encoding UTF8

row_limit off

row_terminator default

skip_rows 0

skip_after_names

#ERROR Unable to scan data.

#ERROR Index -1 out of bounds for length 8

#INFO Number of rows processed: 13

#INFO Number of rows in error: 0

#INFO Last row processed in final committed batch: 13

SUCCESS: Processed without errors

demo@XEPDB1> select count(*) from emp2;

COUNT(*)

___________

13

demo@XEPDB1>

Hey,

just a remark – indirectly linked to the SQLcl too – would like to have the possibility to see private temporary tables in connection tree and to import data into those.

As of now, I have to choose the option that creates the script with insert statements, which has to be tweaked with the correct/wanted PTT table name to insert data into the private temporary table.

I need this many times – I get a .csv file, need to import data into a PTT and do some checks/DML against other tables. As this is the prime use scenario for private temporary tables, it would be nice if it is directly supported.

Kind regards, Aleksander

Hi Jeff,

I was just trying to load a CSV file into a table with the new SQLcl, but I ran into a problem because there are a couple of “not null” columns in the table that are populated by either sequences or triggers and SQLcl does not seem to want to let me omit those columns from the CSV file. I can’t find a “SET LOAD” parameter to turn that behavior off.

#ERROR Omitted columns must be nullable

Thanks.

You need to have your table (if using IDENTITY) defined such that the column is populated on null…so SQLcl submits nothing for the column NULL, and the db sees that and fires the trigger to do the new value. Similar scenario here…BY DEFAULT ON NULL