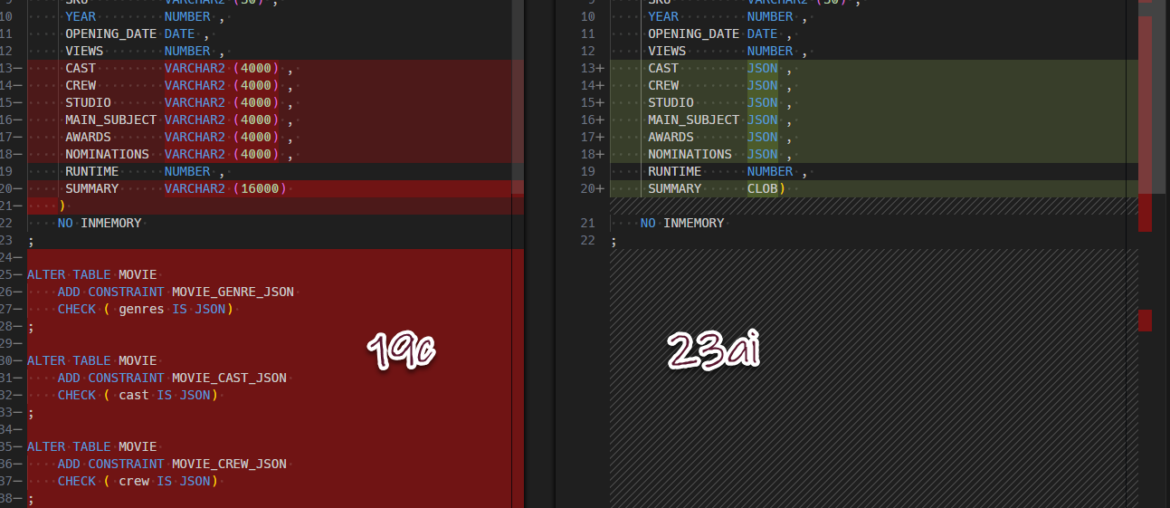

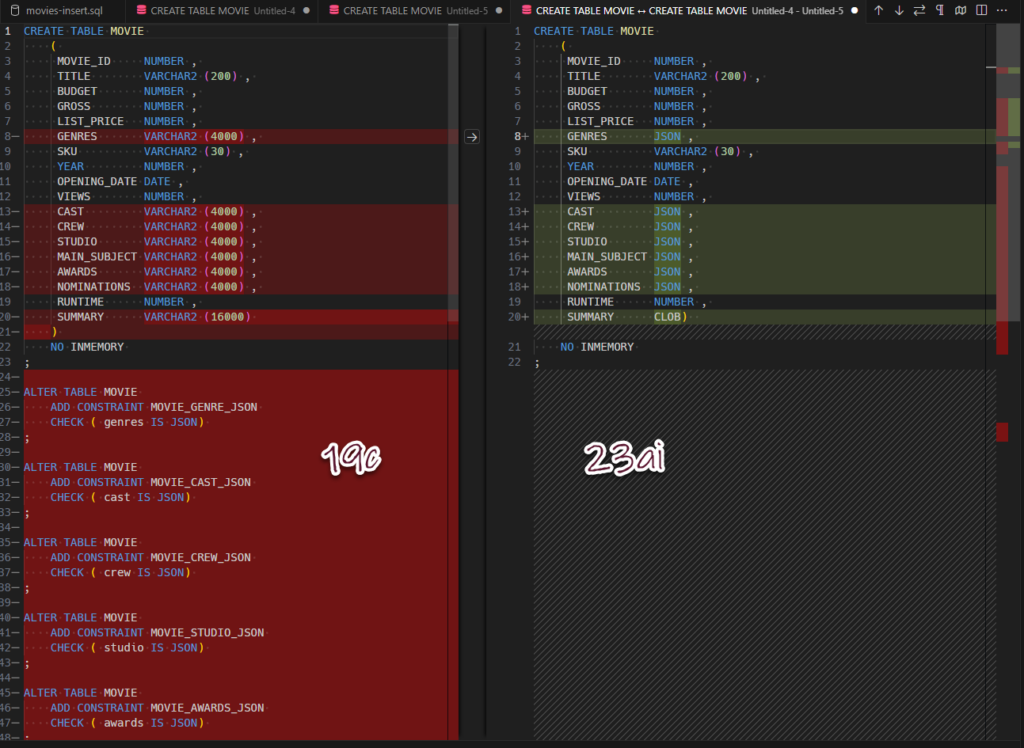

If you’re storing JSON objects, arrays, arrays of objects, etc in your Oracle Database relational tables, you’ve had options.

- VARCHAR2s, with a CHECK CONSTRAINT

- CLOBS, with a CHECK CONSTRAINT

- BLOBS, with a CHECK CONSTRAINT (the most performant of the 3)

The CHECK constraint would enforce an ‘IS_JSON’ rule, and it had several variations, on just how strict that would be.

in 21c and now in 23ai versions of the Database there is a 4th option that you’ll want to take advantage of, and that’s just the native JSON type.

Upgrading to 23ai and moving your JSON data

You have a few options. Tim Hall aka Oracle-BASE demonstrates here on how to just create brand new columns, copying the data over, then dropping the old column, and renaming the new one.

I took a similar track to Tim where I just created my new table and SELECT the old data into the new table, and now my JSON data is stored in a JSON column instead of a VARCHAR2 – and no TO_JSON() function is required, just a straight-up, SELECT.

create table movie_parts ( -- partial table just for demo reasons

title varchar2(100)

, cast json

, genre json

, awards json

);

insert into movie_parts (

title

, cast

, genre

, awards

)

select title

, cast -- json data stored in a VARCHAR2, via movies table

, genre -- json data stored in a VARCHAR2, via movies table

, awards -- json data stored in a VARCHAR2, via movies table

from movies;If you’re thinking, surely this is documented, right? You would be right, and don’t call me Shirely.

If you’re dealing with a LOT of data or if you need to do this with NO down time, then have a look at the JSON Developer’s Guide, they have an entire section detailing how this can be one (Data Pump, DBMS_REDEFINITION, CTAS, …)

It’s worth the effort to properly store this data, one good reason is performance. Here’s a great video on the topic with some colleagues of mine – HIGHLY RECOMMENDED.

Let’s look at an AutoREST or SELECT * FROM set of REST APIs with IS_JSON CHECK Constraints vs JSON native types

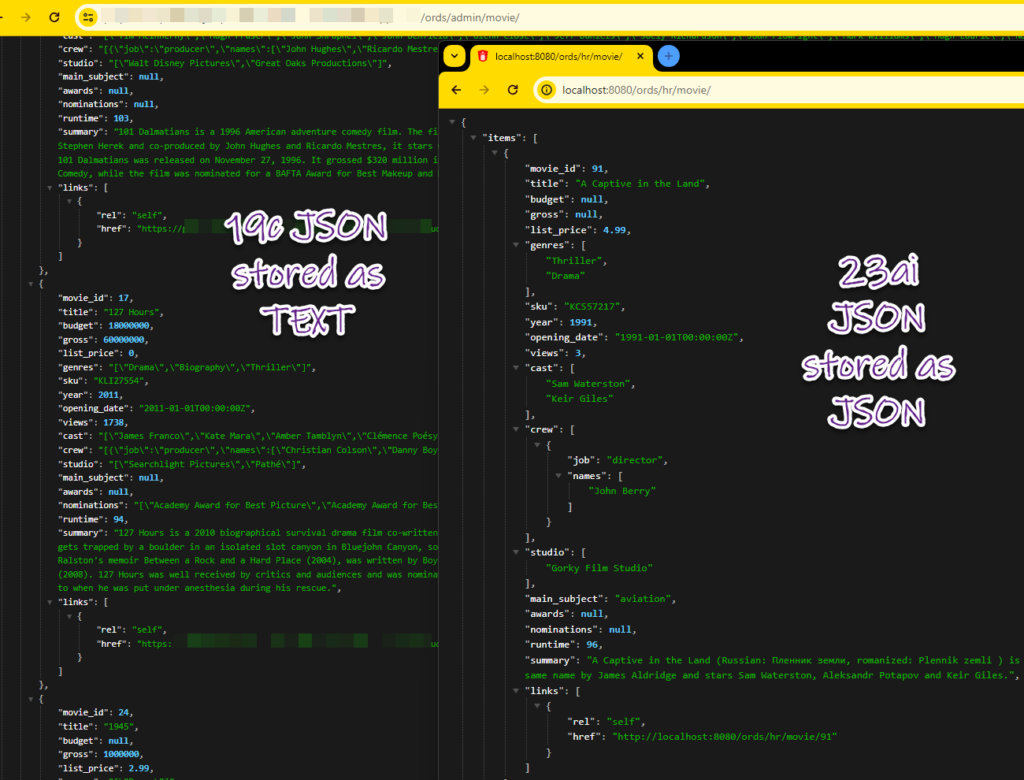

So if I take the same two sets of data, and express one in 19c as VARCHAR/CLOB/BLOB with a IS_JSON CHECK CONSTRAINT vs a 23ai database as JSON native type…REST enabling those tables, the results look like so –

When we get the data from 19c, there’s no way for us (ORDS) to know that some of those attributes are JSON, so we see text, and try to convert them to JSON…so you end up with a bunch of escaped text. Arrays don’t show as arrays, etc.

Whereas in 23ai, the data source is returned to ORDS, and JSON columns are advertised as JSON, so ORDS knows a JSON conversion is NOT necessary.

What if you’re still on 19c?

No worries, you can still work with JSON in your tables and your REST APIs, you simply need to create a REST MODULE, and in your HANDLER code, alias the columns (or plsql parameters) with a leading “{}” string.

I demonstrate this here:

- no escaping JSON in SQL based APIs

- no escaping JSON in PLSQL based APIs…oh snap?!??

I was going to reference the blog post I wrote showing how to do the {} trick for PL/SQL sources vs SQL…but I never wrote that.

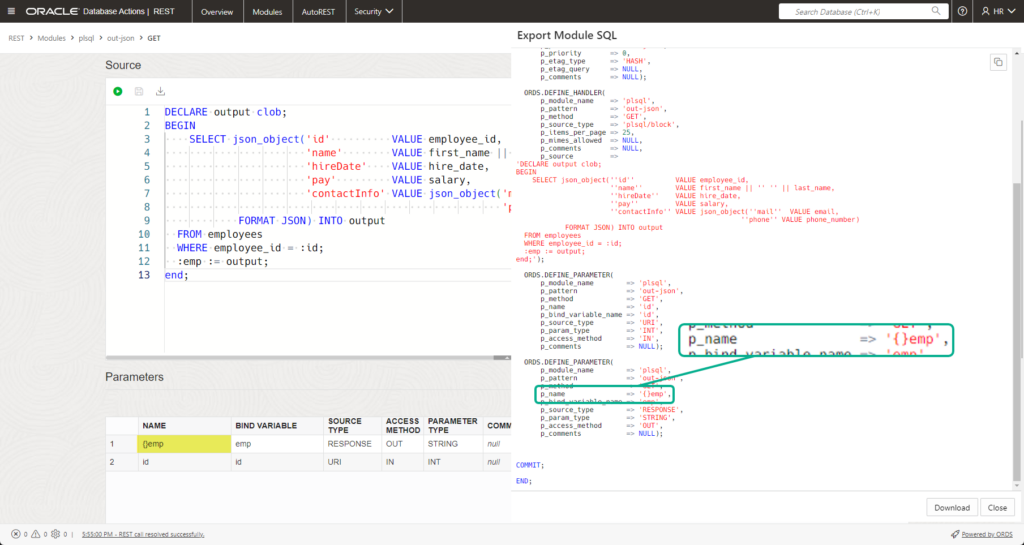

Ok so right quick, when coding up a PL/SQL handler, you’re going to be running some SQL, and probably putting the results of that query into a VARIABLE.

When you define your PL/SQL parameter to tie the variable to an OUTPUT on your REST API, alias the variable with the same leading “{}” that we do on SQL based endpoints and the JSON columns.

In this API, I have a PL/SQL GET endpoint that runs a PL/SQL block to generate some JSON response using the JSON_OBJECT function, and I assign the result of that to my :emp bind variable, which has a corresponding “{}emp” parameter for the REST API.

So let’s look at see what happens when you run this with and without the “{}” prefix on the output parameter.

The main takeaway here, is once you’re using 23ai, you’ll have MUCH less work when it comes to developing and maintaining your REST APIs!

One last thing.

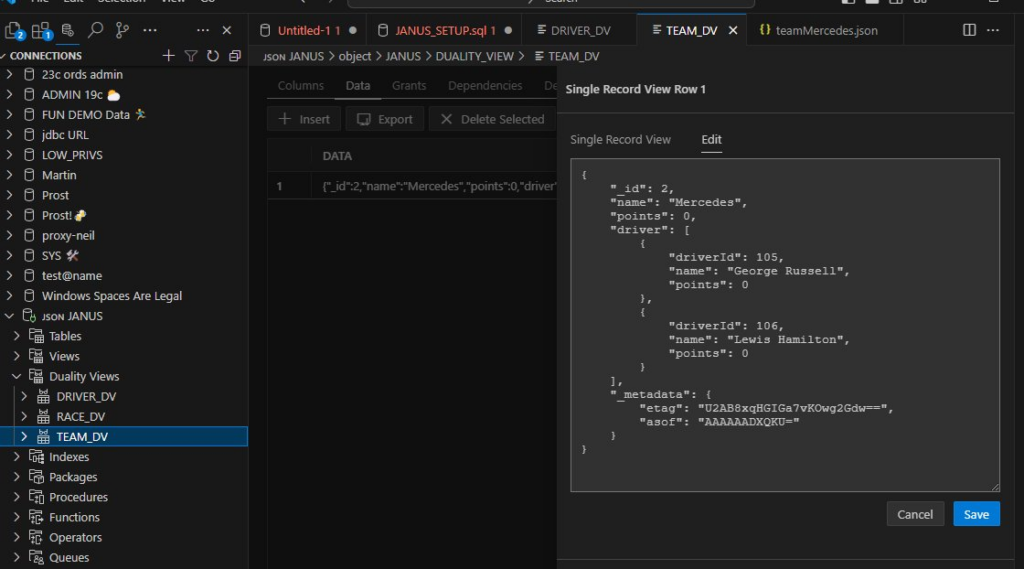

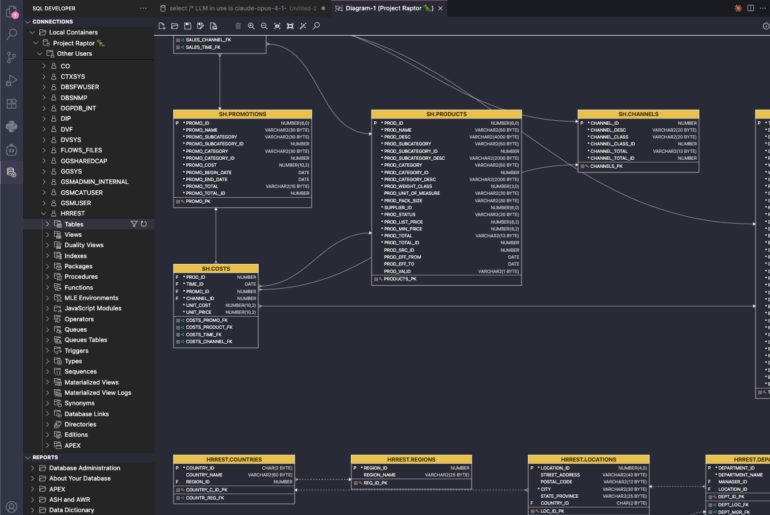

23ai JSON Duality Views

Don’t forget about our new JSON Relational Duality Feature! This allows you take the best of document and relational storage methods, with either SQL or REST access methods.

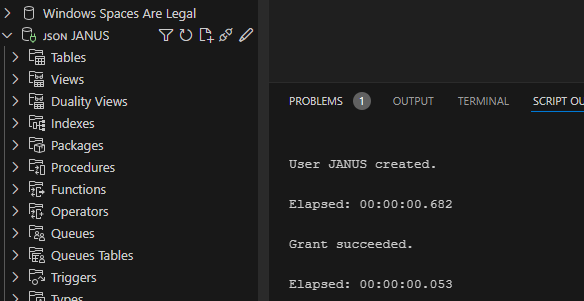

I updated a few blog posts and our official whitepaper (link pending), that demonstrates ou our REST API support for the Duality Views, and I did this work with our VS Code Extension, which already has nice support for JSON and Duality Views.

JANUS is the demo schema we use.

My connection name has emoji J, S, O, and N leading characters.

Built-in Pretty Print